This is a brief overview of a basic performance test. I don't claim that my results can be extrapolated beyond the scope of this specific test.

Bun is the latest addition to the JavaScript runtime scene, challenging the dominance of Node.js in backend applications. Like Deno, another competitor, it runs TypeScript out of the box. Beyond that, Bun promises to be significantly faster than Node.js and to serve as a drop-in replacement for it. Back in September, when Bun reached version 1.0, I ran a preliminary test with one of our APIs. Performance was similar to our existing Node.js (Express.js) app, but memory usage doubled. This wasn't a great start, but I hadn't applied any of Bun's optimizations, and Bun still had some bugs that caused memory leaks. I decided to wait until Bun had matured further.

A couple of days ago, I discovered ElysiaJS. Elysia uses Bun as its default runtime and boasts performance figures that seem almost unbelievable. The framework looks so clean and fun that I decided to test it and see if it's worth further exploration.

For my tests, I plan to reimplement one API endpoint from our existing Express.js application. Our current primary challenge is request latency. We primarily focus on delivering sensor data with 'low latency' to user dashboards or apps. A commonly used request in our service typically takes around 700ms (eww..). We've had to delay implementing more features because they would further increase this latency. Therefore, reducing latency would be incredibly beneficial.

The request I plan to use for this benchmark involves the following steps:

1. Authenticate the user using a JSON Web Token (JWT).
2. Make two MongoDB queries via Mongoose: one to fetch the user's credentials and another to retrieve the devices the user has access to.
3. Run one SQL query per device against PostgreSQL using Postgres.js.
4. Convert the data to a format that's easier for the frontend to consume.
5. Append additional data from a lookup table.
6. Return the JSON payload, which is approximately 9 kB in size.
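The six steps above can be sketched as a single async handler. This is a minimal sketch and not my actual (censored) endpoint. Everything in it is a hypothetical stand-in: the `Deps` interface, the `User`/`Device`/`Reading` shapes, the lookup table keyed by device type, and the reshaping logic replace the real JWT library, Mongoose models, and Postgres.js queries. The dependencies are injected so the flow runs without a database. The sketch also assumes the device query only needs the user ID from the token. That assumption lets both MongoDB lookups, and all per-device SQL queries, run concurrently with `Promise.all`.

```typescript
// Hypothetical data shapes: the real Mongoose and SQL schemas are not shown in the post.
interface User {
  id: string;
  name: string;
}

interface Device {
  id: string;
  type: string;
}

interface Reading {
  deviceId: string;
  ts: number;
  value: number;
}

interface DeviceView {
  id: string;
  label: string;
  latest: Reading | null;
  count: number;
}

// Hypothetical stand-ins for the JWT library, the two Mongoose queries and
// the Postgres.js query; injected so the flow can run without any database.
interface Deps {
  verifyToken(token: string): Promise<{ userId: string }>;
  findUser(userId: string): Promise<User | null>;
  findDevices(userId: string): Promise<Device[]>;
  queryReadings(deviceId: string): Promise<Reading[]>;
  lookup: Record<string, string>; // assumed: device type -> display label
}

async function handleDashboardRequest(
  deps: Deps,
  token: string,
): Promise<{ user: string; devices: DeviceView[] }> {
  // 1. Authenticate via JWT (throws if the token is invalid).
  const { userId } = await deps.verifyToken(token);

  // 2. Both MongoDB lookups, run concurrently (assumes neither needs the other's result).
  const [user, devices] = await Promise.all([deps.findUser(userId), deps.findDevices(userId)]);
  if (user === null) throw new Error("user not found");

  // 3. One SQL query per device, issued concurrently instead of one after another.
  const readings = await Promise.all(devices.map((d) => deps.queryReadings(d.id)));

  // 4. + 5. Reshape for the frontend and append lookup-table data.
  const views: DeviceView[] = devices.map((d, i) => {
    const rows = readings[i];
    const latest = rows.reduce<Reading | null>(
      (acc, r) => (acc === null || r.ts > acc.ts ? r : acc),
      null,
    );
    return { id: d.id, label: deps.lookup[d.type] ?? d.type, latest, count: rows.length };
  });

  // 6. Return a JSON-serialisable payload.
  return { user: user.name, devices: views };
}
```

Issuing the per-device queries concurrently means the SQL phase takes roughly as long as the slowest query rather than the sum of all of them, provided the database and connection pool can serve them in parallel. That matters when latency is the main concern.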

All of this will be served from a Docker container inside our Kubernetes cluster, behind an Nginx load balancer.

So let's start implementing the API in Bun/Elysia. First, we need to install Bun and create a Bun project. Thanks to the `bun create` templates, this takes only seconds.

```bash
curl -fsSL https://bun.sh/install | bash # install Bun
bun create elysia app # scaffold a new Elysia project in ./app
cd app
```

My basic Elysia app looks roughly like this (censored):

```typescript
import { Elysia } from "elysia";
import { swagger } from "@elysiajs/swagger";
import postgresjs from "postgres";

const config = require("../config/config"); // a config object
const mongoose_models = require("./get_mongoose_models");
const mongoConnection = await require("./get_mongoose_connection")(config.DB.MONGODB);
const Entities = require("./Entities"); // a data service class

const app = new Elysia()
  .use(swagger()) // automatically generates an OpenAPI specification
  .decorate("mongoose_models", mongoose_models(mongoConnection)) // exposes the Mongoose models on every request context
  .decorate(
    "postgresjs",
    postgresjs({
      host: config.DB.MAIN.HOST,
      port: config.DB.MAIN.PORT,
      database: config.DB.MAIN.DATABASE_NAME,
      user: config.DB.MAIN.USERNAME,
      password: config.DB.MAIN.PASSWORD,
    }),
  ) // exposes one shared Postgres.js client (a singleton) on every request context
  .decorate("config", config) // exposes the config on every request context
  .decorate("entities", new Entities()) // exposes the data service on every request context
  .onError(({ error }) => {
    // registered before the route: Elysia applies lifecycle hooks only to routes registered after them
    console.error(error);
  })
  .get("/test", async (req) => {
    // all decorators are available on the context, e.g. req.mongoose_models or req.postgresjs
    // HERE WAS MY ENDPOINT CODE (CENSORED)
    return { my: "payload" };
  })
  .listen(3000);
```
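The `.decorate()` calls attach values that are created once at startup to every request's context. That is why the Postgres.js client behaves like a singleton. The sketch below illustrates the idea with a hypothetical `MiniApp` class written only for this example. It is not how Elysia implements `.decorate()` internally, and the `db`/`config` names are placeholders.

```typescript
// Hypothetical mini-framework written only to illustrate the idea behind
// Elysia's `.decorate()`; it is NOT Elysia's implementation.
type Handler<C> = (ctx: C & { path: string }) => unknown;

class MiniApp<C = {}> {
  private decorations: Record<string, unknown> = {};
  private routes = new Map<string, Handler<C>>();

  // Store a value once; every later request sees this same instance.
  decorate<K extends string, V>(key: K, value: V): MiniApp<C & Record<K, V>> {
    this.decorations[key] = value;
    return this as unknown as MiniApp<C & Record<K, V>>;
  }

  get(path: string, handler: Handler<C>): this {
    this.routes.set(path, handler);
    return this;
  }

  // Build a fresh context object per request; the decorated values inside it
  // are shared references, not copies.
  handle(path: string): unknown {
    const handler = this.routes.get(path);
    if (!handler) return { status: 404 };
    const ctx = Object.assign({}, this.decorations, { path }) as unknown as C & { path: string };
    return handler(ctx);
  }
}

// Usage: the "pool" object is created once, like the Postgres.js client.
const sharedPool = { name: "pool" };
const app = new MiniApp()
  .decorate("db", sharedPool)
  .decorate("config", { port: 3000 })
  .get("/db", (ctx) => ctx.db)
  .get("/port", (ctx) => ctx.config.port);
```

Every call to `app.handle("/db")` returns the very same `sharedPool` object. This mirrors why decorating the app with one Postgres.js client means all requests share that client and its connections, rather than opening new ones per request.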

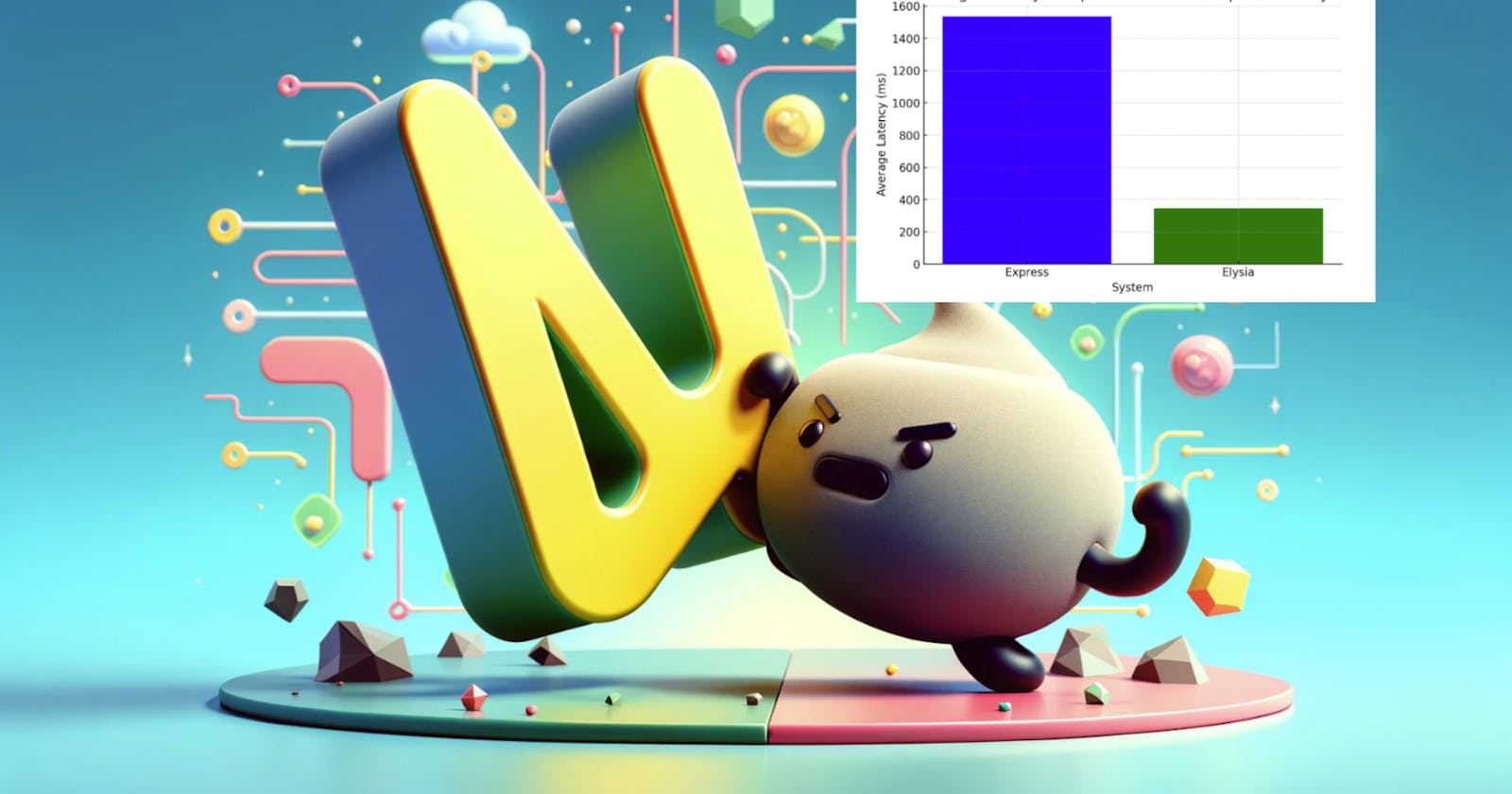

So, what about the performance? To evaluate this, I'm using Autocannon for request benchmarking, comparing it to our existing Express.js application :

That was unexpected!

First of all, I can't quite explain why the original Express.js application performs so poorly under Autocannon. Normally, a single request takes around 700 ms. Under Autocannon, however, its average response time is about 1535 ms, while Elysia impressively clocks in at 346 ms! That makes Elysia 4.4 times faster than the Express.js app under Autocannon, and even compared with the usual 700 ms response time, it's still about twice as fast.
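The speedup figures above are simple latency ratios. The snippet below recomputes them. It also applies Little's law, which offers one possible but unverified explanation for why Express looks worse under Autocannon than for a single request. Under a closed-loop load generator, each of N connections waits for its response before sending the next request, so throughput ≈ N / mean latency. The connection count of 10 is an assumption: it is Autocannon's default, but I haven't stated which settings I used. The derived requests-per-second values are therefore estimates, not measurements.

```typescript
// Latencies quoted in this post, in milliseconds.
const expressUnderLoadMs = 1535;
const elysiaUnderLoadMs = 346;
const expressSingleMs = 700;

// How many times faster `candidateMs` is than `baselineMs`.
function speedup(baselineMs: number, candidateMs: number): number {
  return baselineMs / candidateMs;
}

// Little's law for a closed-loop load generator with no think time:
// throughput (requests per second) ≈ connections / mean latency (seconds).
function impliedThroughput(connections: number, meanLatencyMs: number): number {
  return connections / (meanLatencyMs / 1000);
}

// ASSUMPTION: Autocannon's default of 10 connections; not confirmed for this test.
const CONNECTIONS = 10;

console.log(speedup(expressUnderLoadMs, elysiaUnderLoadMs).toFixed(1)); // 4.4
console.log(speedup(expressSingleMs, elysiaUnderLoadMs).toFixed(1)); // 2.0
console.log(impliedThroughput(CONNECTIONS, expressUnderLoadMs).toFixed(1)); // 6.5
console.log(impliedThroughput(CONNECTIONS, elysiaUnderLoadMs).toFixed(1)); // 28.9
```

Under that assumption, the Express app would be serving roughly 6.5 requests per second versus roughly 29 for Elysia. If the Express app handled 10 concurrent requests fully in parallel, its average latency under load would stay near the ~700 ms it shows in isolation. An average of about 1535 ms instead suggests that concurrent requests are queueing somewhere, though this data alone can't say where.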

Update: For this comparison, I excluded some features from the Express app that weren't present in the Elysia prototype. Notably, the Express app uses a request logger, which may add significant overhead. As I continue to explore Elysia, I'll keep an eye on how the results change once both apps reach feature parity. If there are notable changes, I plan to update this article.
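Reaching feature parity means either giving the Elysia prototype an equivalent request logger or benchmarking the Express app without one. I haven't named the Express logger here, so the sketch below is a hypothetical, framework-agnostic wrapper. `withRequestLog`, `ReqMeta`, `LogEntry`, and the `sink` callback are all invented for this example. The wrapper times each request with `performance.now()` and emits exactly one entry per request, whether the handler succeeds or throws.

```typescript
// Hypothetical request logger; not the Express app's actual middleware.
interface ReqMeta {
  method: string;
  path: string;
}

interface LogEntry extends ReqMeta {
  ms: number; // wall-clock duration of the handler
  ok: boolean; // false if the handler threw
}

type AsyncHandler<Res> = (req: ReqMeta) => Promise<Res>;

function withRequestLog<Res>(
  handler: AsyncHandler<Res>,
  sink: (entry: LogEntry) => void,
): AsyncHandler<Res> {
  return async (req) => {
    const start = performance.now();
    let ok = false;
    try {
      const res = await handler(req);
      ok = true;
      return res;
    } finally {
      // Runs on success and on failure, so every request produces one entry.
      sink({ method: req.method, path: req.path, ms: performance.now() - start, ok });
    }
  };
}

// Usage: collect entries in memory instead of printing them.
const entries: LogEntry[] = [];
const logged = withRequestLog(async () => ({ my: "payload" }), (e) => entries.push(e));
```

Swapping the `sink` between a no-op and a real log destination, then re-running the benchmark, is one way to estimate how much of the latency gap the logging itself accounts for.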

Initially, I thought a 20% performance improvement would be enough to justify further investigation, but a 2x speedup is definitely a strong incentive for me to dig deeper!

So, what's my takeaway?

- Elysia is remarkably fast: roughly 2 to 4 times quicker than our existing application in this test.
- There seems to be an issue with the Express.js app that causes significant performance degradation in Autocannon benchmarks ;)